tl;dr: all workshop materials are available here:

https://rstd.io/conf20-intro-ml

🔗 https://conf20-intro-ml.netlify.com/

License: CC BY-SA 4.0

At rstudio::conf(2020) in January, I was lucky to lead a new two-day workshop called “Introduction to Machine Learning with the Tidyverse.” This workshop was designed for learners who are comfortable using R and the tidyverse, and curious to learn how to use these tools to do machine learning using the modern suite of R packages called tidymodels. If you just read that last sentence and don’t yet know the word “tidymodels” yet, it is a collection of modeling packages that, like the tidyverse, have a consistent API and are designed to work together specifically to support the activities of a human doing predictive modeling (which includes machine learning).

If you have heard or used the caret package, tidymodels is its successor. The development of tidymodels is supported by RStudio, and the team is led by Max Kuhn, the author of caret, Cubist, C50 and other R packages for predictive modeling. Max has offered an Applied Machine Learning workshop for several years now, but we have not yet attempted to teach tidymodels to a beginner audience. Until now! 🎉

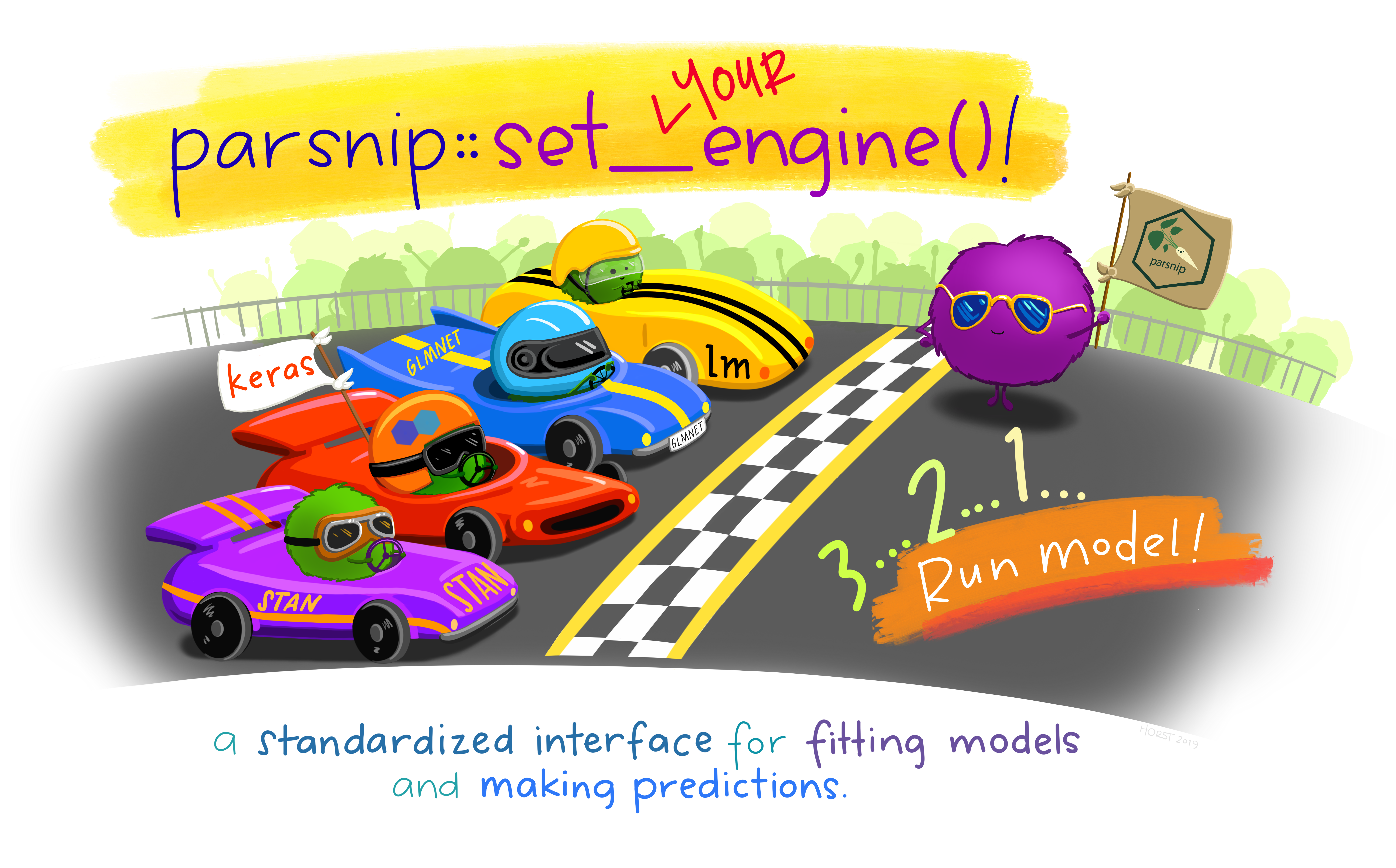

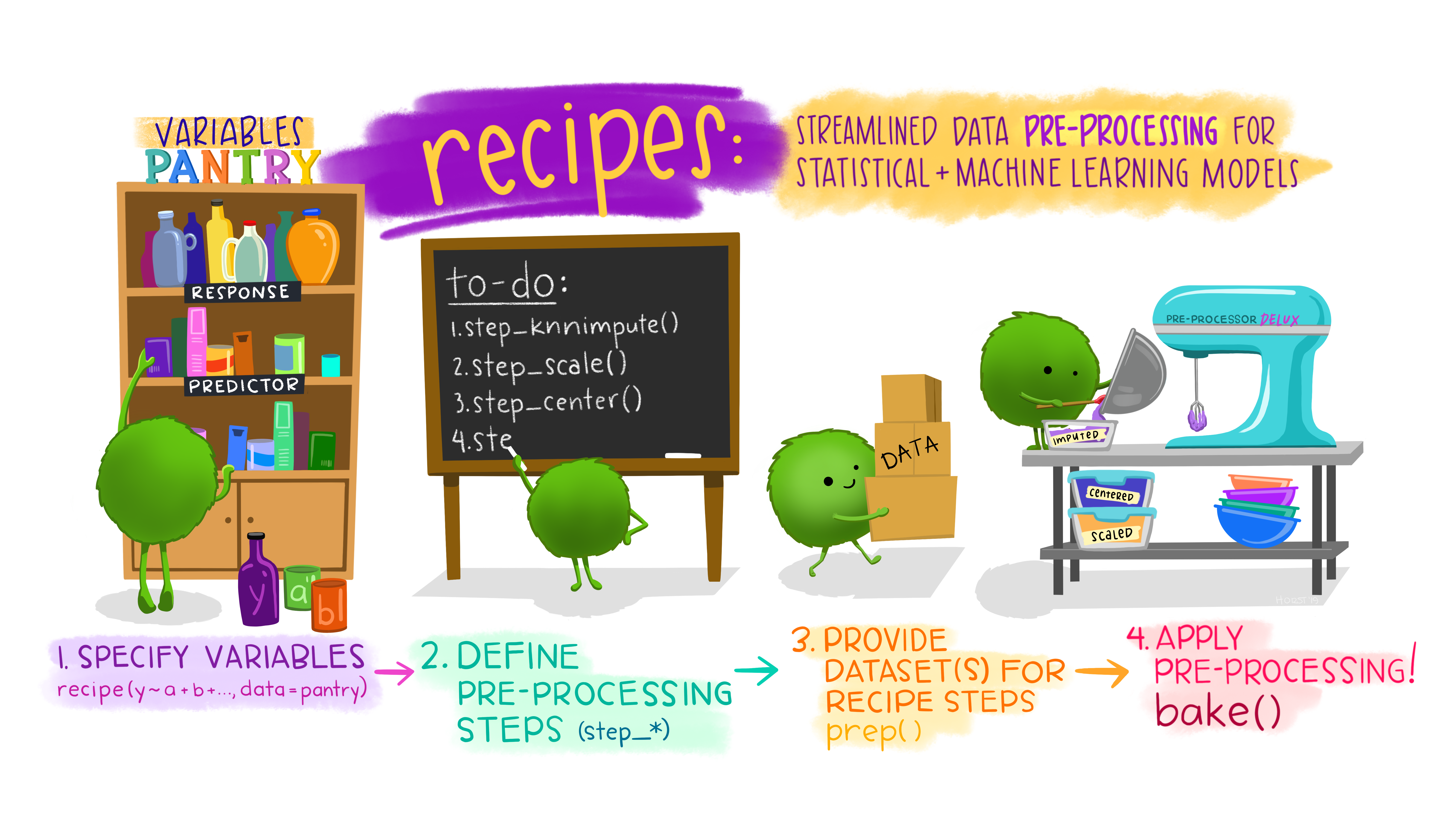

My colleague Garrett Grolemund and I designed the workshop to provide a gentle introduction to supervised machine learning: concepts, methods, and code. Attendees learned how to train and assess predictive models with several common machine learning algorithms, as well as how to do feature engineering to improve the predictive accuracy of their models. We focused on learning intuitive explanations of the models and best practices for predictive modeling. Along the way, we introduced several core tidymodels packages including parsnip, recipes, rsample, and tune, which provide a grammar for modeling that makes it easy to the right thing, and harder to accidentally do the wrong thing.

Prerequisite Knowledge

Before workshops for this year’s conf were announced, we framed two questions to help potential learners gauge whether this workshop was the right one for them:

-

Can you use mutate and purrr to transform a data frame that contains list columns?

-

Can you use the ggplot2 package to make a large variety of graphs?

If you answered “no” to either question, you can brush up on these topics by working through the online tutorials at https://rstudio.cloud/learn/primers.

These questions were driven by the fact that when we started developing the workshop, using tidymodels required fairly advanced purrr skills; see an end-to-end code example from Max’s Applied Machine Learning workshop at rstudio::conf(2019) here.

However, between the time we first conceived of the workshop and when we taught it, a lot of the tidymodels API had changed (for the better). In hindsight, I would reframe with these questions (rationale in italics):

-

Have you used R for statistics, that is, doing hypothesis tests or another kind of inferential modeling? Comfort with at least

lmand hopefully more packages/functions for modeling is helpful. -

Can you use the pipe operator to combine a sequence of functions to transform objects in R (like a data frame)? Tidymodels code uses pipes, but tends to be more for combining functions within a single package rather than across packages.

-

Can you work with tibbles (or data frames) that contain list columns? Tidymodels code generally returns tibbles, often with list columns that you need to get comfortable with.

-

Can you use

dplyr::select()helper functions? This helps when composing recipes for feature engineering.

Packages

We set up RStudio Server Pro workspaces for all workshop attendees, which provided more horsepower for running some of the more computationally intensive models, and which came pre-loaded with all the workshop exercises as R Markdown files and the packages needed to do them pre-installed. For those who wished to follow along on their local machine, we provided the packages needed as prework.

The code made heavy use of packages from the tidyverse and tidymodels:

install.packages(c("tidyverse", "tidymodels"))

Like the tidyverse, tidymodels is a meta-package that bundles most of the building blocks we needed:

library(tidymodels)

## ── Attaching packages ────────────────────────────────────────────────────────────────────────────────── tidymodels 0.0.3 ──

## ✓ broom 0.5.3 ✓ purrr 0.3.3

## ✓ dials 0.0.4 ✓ recipes 0.1.9

## ✓ dplyr 0.8.4 ✓ rsample 0.0.5

## ✓ ggplot2 3.2.1 ✓ tibble 2.1.3

## ✓ infer 0.5.1 ✓ yardstick 0.0.4

## ✓ parsnip 0.0.4.9000

## ── Conflicts ───────────────────────────────────────────────────────────────────────────────────── tidymodels_conflicts() ──

## x purrr::discard() masks scales::discard()

## x dplyr::filter() masks stats::filter()

## x dplyr::lag() masks stats::lag()

## x ggplot2::margin() masks dials::margin()

## x recipes::step() masks stats::step()

## x recipes::yj_trans() masks scales::yj_trans()

Two tidymodels packages were not yet on CRAN at the time of the workshop. We installed the development versions of workflows and tune from GitHub.

# install once per machine

install.packages("remotes")

remotes::install_github(c("tidymodels/workflows",

"tidymodels/tune"))

# load once per work session

library(workflows)

library(tune)

We also used some non-tidymodels packages as well:

install.packages(c("kknn", "rpart", "rpart.plot", "rattle",

"AmesHousing", "ranger", "partykit", "vip"))

# and

remotes::install_github("tidymodels/modeldata")

Teaching Infrastructure

-

RStudio Server Pro: Our RStudio Server Pro workspaces used Amazon compute optimized

c5.largeinstances with 2 vCPUs and 4 GiB memory for each learner. -

Slides: I used the xaringan package to build all my slides in R Markdown. The source files all live within the

site/static/slidesfolder of the repository.- For a xaringan tutorial, you can see my rstudio::conf(2019) workshop slides here.

- I also highly recommend the countdown package, which I used to create the exercise timers ⌛.

-

Workshop website: I used the blogdown R package to build the website, with the Hugo academic theme with a custom CSS designed by Desirée De Leon. If you want to re-use my workshop website (you’ll need GitHub and Netlify accounts), click on Deploy to Netlify button at the top of my

README🚀

In the rest of this post, I’ll walk you through the materials available through the workshop website:

Materials

The workshop consisted of 8 sessions. In each session, we presented slides interspersed with timed group activities and independent coding exercises. All of these links are also available on our website.

| session | Slides | Materials |

|---|---|---|

00 |

||

01 |

||

02 |

||

03 |

||

04 |

||

05 |

||

06 |

||

07 |

||

08 |

Instructor Notes

We did a trial run of this workshop in December 2019 in Boston with about 20 participants, which proved one of Greg Wilson’s cardinal rules:

“Remember that no lesson survives first contact with learners…”

Actually, the workshop went pretty smoothly for a first run, and we received positive feedback from our attendees. But, like any good educators, Garrett and I decided that a content renovation would make the workshop even better. This decision was driven by a few observations:

-

We realized that the process of using a fitted model object for generating predictions was pretty new to many attendees. We needed to spend more time on this, so we beefed up our early section on predicting considerably. This meant that parsnip was the first tidymodels package we introduced, which felt right! Parsnip probably should be the first tidymodels package to learn/teach to new users (previously, we had started with rsample).

-

Many attendees were less familiar with resampling methods in general, and in particular with bootstrap resampling. Since bootstrapping is such a key concept, we pushed cross-validation later and added an earlier section on sampling and resampling.

-

To lay out the red carpet for ensembling (we worked up to bagging and random forest models), we spent some time working with and interpreting single decision trees, including a “Guess the Animal” team activity that helped to loosen everyone up on day 1.

-

A new, but very much welcome, kid on the tidymodels block appeared just before our workshop in December: workflows. For conf, we re-factored our approach to introduce workflows by bundling together formulas and parsnip model specifications first (via

add_formula()andadd_model()), then introducing recipes as a way to move beyond formulas and do feature engineering (substitutingadd_formula()withadd_recipe()instead). -

To accomodate the new and improved content reorganization we envisioned, we hit a few code hiccups. Garrett and I made an executive decision to write some helper functions so that the code just worked and we kept the content on track. These were the earliest fitting functions we used on day 1, before transitioning to

tune::fit_resamples()andtune::tune_grid()on day 2 after introducing cross-validation.As an educator, this is typically something I try to avoid if possible, as my goal is to guide learners to be able to use the package APIs as designed independently. But debugging this specific error introduced too much “inessential weirdness” because:

- we would have needed to describe things that were not really necessary to understand, and

- these things were likely to alienate people (you can follow a discussion and reprex of one error here).

Bottom-line: If you are trying to follow these slides on your own, open the accompanying exercise files for each slide deck and run the first chunk locally (look out for our helper functions named

fit_data()andfit_split()). If you want to take the training wheels off and use the base tidymodels functions, you may run into similar errors, but roughly:fit_data()=parsnip::fit()fit_split()=tune::last_fit()

-

Finally, we re-worked most of our exercises (within R Markdown documents) to provide code templates that were either “fill-in-the-blanks” or “fix me” (i.e., replace or add arguments to already written code). On the first run, it became abundantly clear that, because tidymodels code can be verbose, we wore learners out with too much typing. In fact, we wore ourselves out typing. To reduce the typing (and cognitive) load, we tried to adopt a no-code-chunk-left-blank strategy so that learners did not feel like this at the end:

Figure 3: Video from YouTube.

What would I change?

In hindsight after teaching this material twice, I would try to make room for a final case study with a new dataset so that learners get a chance to create a full predictive modeling pipeline, from the initial split to the last fit. To make room for a case study, I would try to get workflows and recipes to join forces a bit earlier. One of the clearest benefits of using workflows is that you don’t need to spend too much time monkeying around with the prep, bake, and juice functions from the recipes package, so we could shorten the bridge between presenting these two packages considerably. I also think that cross-validation and tuning could be more closely aligned timing-wise, since tuning with tidymodels is only possible with resampled data.

Thanks

I sincerely enjoyed developing this workshop with Garrett, getting a chance to work closely with Max Kuhn and Davis Vaughan of the tidymodels team (now including the inimitable Julia Silge!), and having the opportunity to introduce a new cohort of R and tidyverse users to tidymodels. I hope the materials we developed are useful to learners and other educators too—if they are, please let me know, I’d love to hear about it.

And most of all—thanks to our Boston and San Francisco workshop participants! You all were a pleasure to model with.

Happy predictive modeling!

Special thanks

This workshop was made possible by an ⭐ all-star ⭐ TA team- you can find out more about them on our workshop website.